I think it's impossible to learn everything there is to know about rendering, or at least impossible in any reasonable amount of time, given how many things evolve at once and how deep one can go in any one area or platform. I've been spending a fair amount of time working on rendering systems on Meta Quest devices over the last year and change. Consolidating some of this seems like it might be a useful resource for others (including future me), and so here we go.

In fact, this subject is so interesting, I'm willing to throw in some graphics. Very apropos.

Basics

- OpenXR Mobile SDK. I work the most at this level (rather than in Unity or Unreal specifically) and so it's good to get going at the right point. OpenXR APIs are what distinguish VR programming from regular mobile development.

- OpenXR Render Loop is written in particular ways for good reasons.

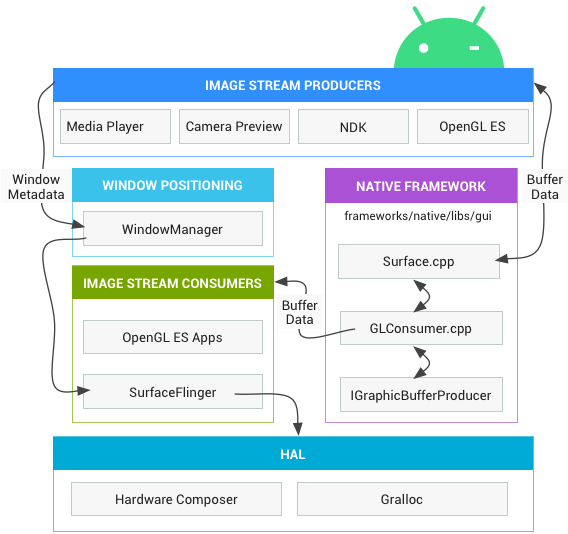
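To make the render loop bullet a bit more concrete, here's a rough sketch of the per-frame call order. The function names in the recorded strings are real OpenXR entry points, but `FakeRuntime`, its methods, and the dictionary keys are made up for illustration; this only logs the order of calls and doesn't talk to any runtime.

```python
from dataclasses import dataclass, field


@dataclass
class FakeRuntime:
    """Hypothetical stand-in for an OpenXR runtime; it only logs call order."""
    calls: list = field(default_factory=list)

    def wait_frame(self):
        # Stands in for xrWaitFrame, which paces the app to the display.
        self.calls.append("xrWaitFrame")
        return {"predicted_display_time": 1, "should_render": True}

    def begin_frame(self):
        self.calls.append("xrBeginFrame")

    def locate_views(self, display_time):
        # Stands in for xrLocateViews: per-eye poses for the predicted time.
        self.calls.append("xrLocateViews")
        return ["left", "right"]

    def acquire_image(self):
        self.calls.append("xrAcquireSwapchainImage")
        self.calls.append("xrWaitSwapchainImage")
        return 0  # index of the swapchain image to render into

    def release_image(self):
        self.calls.append("xrReleaseSwapchainImage")

    def end_frame(self, layers):
        self.calls.append("xrEndFrame")


def render_frame(runtime, draw):
    """One iteration of the loop: wait, begin, render if asked to, end."""
    state = runtime.wait_frame()
    runtime.begin_frame()
    layers = []
    if state["should_render"]:
        views = runtime.locate_views(state["predicted_display_time"])
        image = runtime.acquire_image()
        for view in views:
            draw(view, image)
        runtime.release_image()
        layers.append("projection")
    # The frame is always ended, even when nothing was rendered.
    runtime.end_frame(layers)
    return layers


runtime = FakeRuntime()
drawn = []
render_frame(runtime, lambda view, image: drawn.append(view))
print(runtime.calls)
```

The point of the shape is that waiting and pose prediction happen before any drawing, and that every begun frame is ended even when rendering is skipped.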

Android Rendering

- Graphics on the AOSP site has a pretty nice overview of the components and how they roughly layer. The Surface.cpp file referenced there includes a cool global to poke around in, if that's your thing: sCachedSurfaces.

- Graphics Architecture is quite succinct but links to each of the components it mentions along with a brief description, and so it's a great point to jump into additional topics.

- Skia is the 2D library that you'll see mentioned in regard to the 'canvas API'.

- ANGLE is a popular library that runs WebGL/OpenGL ES on top of other runtimes like Vulkan, desktop OpenGL, D3D9, D3D11, and Metal.

- OpenGL ES. OpenGL ES is a flavor of the OpenGL specification intended for embedded devices. OpenGL ES 3.1 is supported by Android 5.0 (API level 21) and higher. OpenGL ES 3.2 adds some additional features described in Appendix F.

- Vulkan.

- Android Graphics '22 Talk. Covers front-buffered platform support in Android 13, simplified blurs in Android 12 via the RenderEffect API, SurfaceView (basically, use SurfaceView unless you're doing some specific integration with the View hierarchy), and AGSL (another not-quite-GLSL language!) for pixel shaders on Android 13, which is a rename of the Skia shader language (SkSL).

- Android Game Development Kit (AGDK) update: Adaptability and performance features. Includes discussion of performance hint APIs and thermal APIs to get changes and forecasted thermal headroom.

GPU Specifics

- GPU architecture types explained by rastergrid, comparing classic GPUs with tile-based GPUs.

- Quest has a Qualcomm Snapdragon 835 and Quest 2 a Qualcomm Snapdragon XR2. They both have 1MB of tile memory (note).

- On the XR2, where a tile will contain both the left and right eye views in a multi-view rendering pipeline, an application running 4xMSAA, 32-bit color, and a 24/8 depth/stencil buffer will have 96x176 tiles.

- QCOM_texture_foveated. Foveated rendering is a technique that aims to reduce fragment processing workload and bandwidth by reducing the average resolution of a render target. Perceived image quality is kept high by leaving the focal point of rendering at full resolution. Goes along with QCOM_framebuffer_foveated.
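As a sanity check on the 96x176 tile size quoted above, here's a back-of-the-envelope calculation. It assumes the tile dimensions apply per view, that both views live in tile memory at once, and that color and depth/stencil are both stored per MSAA sample; those are my assumptions, not something the linked note spells out, and the `tile_bytes` helper is hypothetical.

```python
def tile_bytes(width, height, views, msaa_samples, color_bytes, depth_stencil_bytes):
    """Bytes of tile memory needed for one tile under the assumptions above."""
    samples = width * height * views * msaa_samples
    return samples * (color_bytes + depth_stencil_bytes)


total = tile_bytes(
    width=96,
    height=176,
    views=2,                # left and right eye in one multiview tile
    msaa_samples=4,         # 4xMSAA
    color_bytes=4,          # 32-bit color
    depth_stencil_bytes=4,  # 24-bit depth + 8-bit stencil
)
print(total, round(total / (1024 * 1024), 2))  # 1081344 1.03
```

Under these assumptions a tile works out to roughly 1.03 MiB, which is in the same ballpark as the tile memory figure above. Halving MSAA or dropping a view would let the driver pick a larger tile.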

Rendering Specifics

OK, let's talk about ways to organize passes first. The Unity package actually lays out reasonably simple options across Rift and Quest.

- Rift / Quest - Multi Pass. Unity renders each eye independently by making two passes across the scene graph. Each pass has its own eye matrices and render target. Unity draws everything twice, which includes setting the graphics state for each pass. This is a slow and simple rendering method which doesn't require any special modification to shaders.

- Rift - Single Pass Instanced. Unity uses a texture array with two slices, and uses instanced draw calls (converting non-instanced draw calls to instanced versions when necessary) to direct rendering to the appropriate texture slice. Custom shaders need to be modified for rendering in this mode. Use Unity's XR shader macros to simplify authoring custom shaders.

- Quest - Multiview. Multiview is essentially the same as the Single Pass Instanced option described above, except the graphics driver does the draw call conversion, requiring less work from the Unity engine. As with Single Pass Instanced, shaders need to be authored to enable Multiview. Using Unity's XR shader macros will simplify custom shader development.
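The difference between these modes is easiest to see by counting what gets submitted. Here's a toy model, with hypothetical names throughout (`multi_pass`, `single_pass`, `eye_coverage`), that only counts draw submissions and state setups; it says nothing about real GPU cost.

```python
EYES = ("left", "right")


def multi_pass(scene):
    """One full pass over the scene per eye: state is set and draws issued twice."""
    state_setups, draws = 0, []
    for eye in EYES:
        state_setups += 1
        for obj in scene:
            draws.append((obj, (eye,)))
    return state_setups, draws


def single_pass(scene):
    """Single Pass Instanced / Multiview: one submission per object covers both eyes.

    In the instanced case the engine turns each draw into a two-instance draw;
    with multiview the driver does the per-eye broadcast instead. The count of
    submitted draws is the same either way.
    """
    state_setups = 1
    draws = [(obj, EYES) for obj in scene]
    return state_setups, draws


def eye_coverage(draws):
    """Expand submissions into the (object, eye) pairs that end up rendered."""
    return sorted((obj, eye) for obj, eyes in draws for eye in eyes)


scene = ["floor", "table", "lamp"]
mp_setups, mp_draws = multi_pass(scene)
sp_setups, sp_draws = single_pass(scene)
print(len(mp_draws), len(sp_draws))
```

Both approaches render the same object/eye pairs; the single-pass variants just get there with half the submissions and one round of state setup.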

Speaking of which, Unity VR Optimization: Quest 2 Settings is a decent walk-through of settings to apply to a Unity project to tune it for Quest. Be sure to take a look at the top comments for a discussion of Linear vs Gamma colorspace.

Now, let's talk a bit more about the passes themselves. Again, people write a lot about Unity, so it's simple to find resources.

- Choosing Between Forward or Deferred Rendering Paths in Unity. An explanation focusing on differences and some Unity particulars / constraints.

Quest Specifics

- Performance and Optimization on Meta Quest Platform. From Meta Connect 2022. Covers eye-tracked foveated rendering (ETFR, supported on Quest Pro), symmetric FOV, application SpaceWarp, and memory optimization in Unity Vulkan, along with some perf tool updates (Perfetto and RenderDoc Meta Fork aka RenderDoc for Oculus) including instructions on how to get GPU information at different levels of detail, simpleperf, and counters in RenderDoc.

- VK_QCOM_fragment_density_map_offset. This extension allows an application to specify offsets to a fragment density map attachment, changing the framebuffer location where density values are applied without having to regenerate the fragment density map.

- The talk also describes how cores map to Perfetto traces: CPUs 0-3 are silver cores and CPU 7 is the gold prime (or platinum) core, and these are reserved for system use. CPUs 4-6 are the gold cores, fully accessible to apps.

- Reference Spaces. Turns out that the head position / camera position is hugely important when you're doing VR. This really is an OpenXR concept though - if you play by OpenXR rules, you should be fine.

- Passthrough. Passthrough enables MR experiences by taking the world as seen through the device cameras and rendering it so it looks like the user is "seeing through" the device.

- An app cannot access images or videos of a user's physical environment. Instead, the application submits a special placeholder layer. This layer gets replaced with the actual Passthrough rendition by the XR Compositor.

- Performance measurements require some particulars, but the linked page includes instructions on how to lock the CPU and GPU levels and disable dynamic foveation for more consistent results.
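When reading a Perfetto trace it helps to have the core mapping from the talk in a form you can query. Here's a small sketch that encodes the layout described above; the function names and the idea of expressing the app-accessible cores as a bitmask are my own illustration, not something from the talk.

```python
SILVER, GOLD, PRIME = "silver", "gold", "prime"


def core_kind(cpu):
    """Classify a CPU index using the layout described in the talk."""
    if 0 <= cpu <= 3:
        return SILVER
    if 4 <= cpu <= 6:
        return GOLD
    if cpu == 7:
        return PRIME
    raise ValueError(f"unexpected CPU index: {cpu}")


def app_cores():
    """CPU indices that are fully accessible to apps (the gold cores)."""
    return [cpu for cpu in range(8) if core_kind(cpu) == GOLD]


def affinity_mask(cpus):
    """Pack a list of CPU indices into a bitmask, one bit per core."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return mask


print(app_cores(), hex(affinity_mask(app_cores())))  # [4, 5, 6] 0x70
```

If a trace shows app threads landing on anything outside CPUs 4-6, that's a hint the work is either system-side or being scheduled somewhere you didn't expect.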

Consider this a snapshot - these resources will evolve over time at my rendering wiki page.

Happy rendering!

Tags: graphics